CleverMaps Shell is controlled solely by a set of predefined commands. These commands can be divided into 4 categories, or workflow states. All commands and parameters are case-sensitive.

Each command can be further specified by parameters, which can have default values. Each parameter must be prefixed with "--" on the command line; for the sake of readability, this prefix is omitted in the tables below.

Parameters of string type, when specified, take a string as a value. Parameters of enum type accept one of a predefined set of strings. Parameters of boolean type can be passed true, false, or no value at all (which is equivalent to true).
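The parameter conventions above can be illustrated with a small sketch. This is not the Shell's actual parser; the function name `parse_params` and the `boolean_params` argument are hypothetical, and the sketch assumes that every non-boolean parameter takes exactly one value.

```python
def parse_params(tokens, boolean_params):
    """Parse tokens such as ["--mode", "full", "--verbose"] into a dict.

    boolean_params is the set of parameter names (without the "--" prefix)
    that are of boolean type. All other parameters are assumed to take
    exactly one value (an assumption of this sketch).
    """
    params = {}
    i = 0
    while i < len(tokens):
        token = tokens[i]
        if not token.startswith("--"):
            raise ValueError(f"expected a parameter, got {token!r}")
        name = token[2:]
        nxt = tokens[i + 1] if i + 1 < len(tokens) else None
        if name in boolean_params:
            # Matching is case-sensitive: only "true" and "false" count.
            if nxt in ("true", "false"):
                params[name] = (nxt == "true")
                i += 2
            else:
                # A boolean parameter passed with no value means true.
                params[name] = True
                i += 1
        else:
            if nxt is None or nxt.startswith("--"):
                raise ValueError(f"parameter --{name} needs a value")
            params[name] = nxt
            i += 2
    return params
```

For example, `--dataset orders --mode full --verbose` yields `verbose` set to true without an explicit value.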

Workflow states

- Started - you have started the tool

- Connected to server - you have successfully logged in to your account on a specific server

- Opened project - you have opened a project you have access to

- Opened dump - you have created a new dump, or opened an existing one

Started state

login

Log in as a user to CleverMaps with correct credentials.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| email | string | | your email account | stored in credentials file |
| password | string | | your password | stored in credentials file |
| accessToken | string | | generated CleverMaps access token (see how to get one) | stored in config file |
| bearerToken | string | | JWT token generated after signing with limited 1h validity | |
| dumpDirectory | string | | directory where your dumps will be stored | stored in config file |
| server | string | | server to connect to; default = https://secure.clevermaps.io | stored in config file |
| proxyHost | string | | proxy server hostname | stored in config file |
| proxyPort | integer | | proxy server port | stored in config file |
| s3AccessKeyId | string | | AWS S3 Access Key ID; required for S3 upload (loadCsv --s3Uri) | stored in config file |
| s3SecretAccessKey | string | | AWS S3 Secret Access Key; required for S3 upload (loadCsv --s3Uri) | stored in config file |

setup

Store your config and credentials in a file so you don't have to specify them each time you log in.

Connected to server

openProject

Open a project and set as current.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| project | string | | Project ID of project to be opened | |

listProjects

List all projects available to you on the server.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| verbose | boolean | | specifies if the output should be more verbose | |
| share | enum | | list projects by share type | [demo, dimension, template] |
| organization | string | | list projects by organization (organization ID) | |

createProject

Create a new project and open it.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| title | string | | title of the project | |
| description | string | | description of the project; the description can be formatted using markdown syntax | |
| organization | string | | ID of the organization which will become the owner of the project | |

editProject

Edit project properties.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| project | string | | Project ID of project to be edited | |
| newTitle | string | | new title of the project | |
| newDescription | string | | new description of the project | |
| newStatus | string | | new status of the project | [enabled, disabled] |
| newOrganization | string | | new ID of the organization which will become the owner of the project | |

deleteProject

Delete an existing project.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| project | string | | Project ID of the project to be deleted | |

Opened project

importProject

Allows you to import either a project from a server (--project parameter) or a project dump (--dump parameter).

You can also import a part of a project with one of the parameters (dashboards, datasets, indicators, indicatorDrills, markers, markerSelectors, metrics, views). If you specify none of these parameters, the whole project will be imported. Every time you specify the datasets parameter, the corresponding data will be imported as well.

Before each import, validate command is called in the background. If there are any model validation violations in the source project, the import is stopped, unless you also provide the --force parameter.

During the import, the origin key is set on all metadata objects. This key indicates the original location of the object (server and project). It has a special use when importing datasets and data. The import first compares the datasets currently in the project with the datasets that are about to be imported. Datasets that are not present in the destination project are imported automatically. For datasets that are already present in the destination project, 3 cases might occur:

- if they have the same name and origin, the dataset will not be imported

- if they have the same name but different origin, a warning is shown and the dataset will not be imported

- if a prefix is specified, all source datasets will be imported
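The dataset rules above can be sketched as a small decision function. This is only an illustration of the described behaviour, not the Shell's implementation; the function name `plan_dataset_import` and the representation of the origin as a plain string are assumptions.

```python
def plan_dataset_import(source, destination, prefix=None):
    """Decide what happens to each source dataset during an import.

    source and destination map dataset names to their origin
    (a hypothetical string identifying server and project).
    Returns a dict mapping each source dataset name to an action.
    """
    plan = {}
    for name, origin in source.items():
        if prefix is not None:
            # With a prefix, all source datasets are imported.
            plan[name] = "import"
        elif name not in destination:
            # Datasets missing in the destination are imported automatically.
            plan[name] = "import"
        elif destination[name] == origin:
            # Same name and same origin: nothing to import.
            plan[name] = "skip"
        else:
            # Same name but a different origin: warn and do not import.
            plan[name] = "skip with warning"
    return plan
```

Calling it with and without `prefix` shows how a prefix overrides the name and origin comparison.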

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| server | string | | Hostname of the server from which the project will be imported; default = https://secure.clevermaps.io | |
| project | string | | Project ID of the project from which files will be imported; either project or dump must be specified | |
| dump | string | | ID of a project dump to be imported; either project or dump must be specified | |
| cascadeFrom | string | | cascade import object and all objects it references; see usage examples below | |
| prefix | string | | specify a prefix for the metadata objects and data files | |
| dashboards | boolean | | import dashboards only | |
| datasets | boolean | | import datasets only | |
| indicators | boolean | | import indicators only | |
| indicatorDrills | boolean | | import indicator drills only | |
| markers | boolean | | import markers only | |
| markerSelectors | boolean | | import marker selectors only | |
| metrics | boolean | | import metrics only | |
| projectSettings | boolean | | import project settings only | |
| shares | boolean | | import shares only | |
| views | boolean | | import views only | |
| force | boolean | | ignore source project validate errors and proceed with import anyway; skip failed dataset dumps (for projects with incomplete data); default = false | |
| skipData | boolean | | skip data import; default = false | |

Usage examples:

Cascade import examples:

    // import all objects referenced from catchment_area_view including datasets & data
    importProject --project djrt22megphul1a5 --server --cascadeFrom catchment_area_view

    // import all objects referenced from catchment_area_view except datasets & data
    importProject --project djrt22megphul1a5 --server --cascadeFrom catchment_area_view --dashboards --exports --indicatorDrills --indicators --markerSelectors --markers --metrics --views

    // import all objects referenced from catchment_area_dashboard
    importProject --project djrt22megphul1a5 --server --cascadeFrom catchment_area_dashboard

    // import all objects (datasets) referenced from baskets dataset - data model subset
    importProject --project djrt22megphul1a5 --server --force --cascadeFrom baskets

importDatabase

Allows you to create datasets and import data from an external database.

This command reads the database metadata, from which datasets are created, then imports the data and saves it as CSV files. You can skip either step with the --skipMetadata and --skipData parameters. Please note that this command does not create any metadata objects other than datasets. It's also possible to import only specific tables using the --tables parameter.

The database must be located on a running database server which is accessible under a URL. This can be on localhost, or anywhere on the internet. Valid credentials to the database are necessary.

So far, the command supports these database engines:

- PostgreSQL

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| engine | enum | | name of the database engine | [postgresql] |
| host | string | | database server hostname; for local databases, use localhost | |
| port | integer | | database server port | |
| schema | string | | name of the database schema; leave out if your engine does not support schemas, or the schema is public | |
| database | string | | name of the database | |
| user | string | | user name for login to the database | |
| password | string | | user's password | |
| tables | array | | list of tables to import; leave out if you want to import all tables from the database; example = "orders,clients,stores" | |
| skipData | boolean | | skip data import; default = false | |
| skipMetadata | boolean | | skip metadata import; default = false | |

Usage examples:

    importDatabase --engine postgresql --host localhost --port 5432 --database my_db --user postgres --password test

    importDatabase --engine postgresql --host 172.16.254.1 --port 6543 --schema my_schema --database my_db --user postgres --password test --tables orders,clients,stores

loadCsv

Load data from a CSV file into a specified dataset.

loadCsv also offers various CSV input settings. Your CSV file may contain specific features, like custom quote or separator characters. The parameters with the csv prefix allow you to configure the data load to fit these features, instead of transforming the CSV file to one specific format. Special cases include the csvNull and csvForceNull parameters.

- csvNull allows you to specify a value which will be interpreted as a null value

- csvForceNull then specifies the columns on which the custom null replacement should be enforced, e.g. "name,title,description"
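One possible reading of how csvNull and csvForceNull interact is sketched below. It is a simplified illustration, not the server's actual loading logic: the function name `apply_null_replacement` is hypothetical, and the sketch assumes that the replacement is applied only to the columns listed in csvForceNull, while other columns keep their values unchanged.

```python
import csv
import io


def apply_null_replacement(csv_text, csv_null, force_null_columns):
    """Replace the custom null value with None in the selected columns.

    csv_text is the CSV content including a header row, csv_null is the
    value to interpret as null, and force_null_columns is the set of
    column names on which the replacement is enforced (an assumption of
    this sketch; the real behaviour may differ).
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = []
    for row in reader:
        rows.append({
            column: None if column in force_null_columns and value == csv_null else value
            for column, value in row.items()
        })
    return rows
```

With `csv_null="_"` and `force_null_columns={"name", "title"}`, an underscore in the name or title column becomes a null, while an underscore in any other column is kept as text.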

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| file | string | | path to the CSV file; one of file, s3Uri or url parameters must be specified | |
| s3Uri | string | | URI of an object on AWS S3 to upload (see examples below); one of file, s3Uri or url parameters must be specified | |
| url | string | | HTTPS URL which contains a CSV file to be loaded into the dataset; one of file, s3Uri or url parameters must be specified | |
| dataset | string | | name of dataset into which the data should be loaded | |
| mode | enum | | data load mode; incremental mode appends the data to the end of the table; full mode truncates the table and loads the table anew | [incremental, full] |
| csvHeader | boolean | | specifies if the CSV file to upload has a header; default = true | |
| csvSeparator | char | | specifies the CSV column separator character; default = , | |
| csvQuote | char | | specifies the CSV quote character; default = " | |
| csvEscape | char | | specifies the CSV escape character; default = \ | |
| csvNull | string | | specifies the replacement of custom CSV null values | |
| csvForceNull | enum | | specifies which CSV columns should enforce the null replacement | |
| verbose | boolean | | enables more verbose output; default = false | |
| multipart | boolean | | enables multipart file upload (recommended for files larger than 2 GB); default = false | |
| gzip | boolean | | enables gzip compression; default = true | |
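The difference between the two values of the mode parameter can be sketched with an in-memory list standing in for the dataset table. The function name `load_rows` is hypothetical and the sketch only mirrors the described semantics, not the actual load process.

```python
def load_rows(table, new_rows, mode):
    """Load new_rows into table (a list of rows) using the given mode.

    "incremental" appends the rows to the end of the table,
    "full" truncates the table first and then loads the rows anew.
    """
    if mode == "full":
        table.clear()
    elif mode != "incremental":
        raise ValueError(f"unknown mode: {mode!r}")
    table.extend(new_rows)
    return table
```

Loading the same rows twice in incremental mode therefore duplicates them, whereas full mode always leaves only the most recently loaded rows in the table.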

Usage examples:

Please note that your AWS S3 Access Key ID and Secret Access Key must first be set using the setup command.

Load CSV from AWS S3:

    loadCsv --dataset orders --mode full --s3Uri s3://my-company/data/orders.csv --verbose

Load CSV from HTTPS URL:

    loadCsv --dataset orders --mode full --url https://www.example.com/download/orders.csv --verbose

dumpCsv

Dump data from a specified dataset into a CSV file. This creates a new dump containing the dumped data.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| directory | string | | path to a directory where project's dump will be created | stored in config file |
| dataset | string | | name of the dataset to dump | |

dumpProject

Dump project data and metadata to a directory. If the dump is successful, it is opened as the current dump.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| directory | string | | path to a directory to which the dump will be saved | stored in config file |
| skipMetadata | boolean | | skip metadata dump; default = false | |
| skipData | boolean | | skip data dump; default = false | |
| force | boolean | | skip failed dataset dumps (for projects with incomplete data); default = false | |
| nativeDatasetsOnly | boolean | | import only native datasets (without origin attribute); default = false | |

listDumps

List all project dumps in a local directory.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| directory | string | | path to a parent directory of a specific project's dumps | stored in config file |

openDump

Open a specific dump.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| directory | string | | path to a parent directory of a specific project's dumps | stored in config file |
| dump | string | | open a specified dump (e.g. 2019-01-01_12-00-00); if not specified, the latest dump is opened | |

truncateProject

Deletes all metadata and data from the project.

This command has no parameters.

validate

Validate the project's data model and data integrity.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| project | string | | project ID of other project which will be validated | |
| skipModel | string | | skip validations of the data model; default = false | |
| skipData | string | | skip validations of the data itself; default = false | |

Opened dump

addMetadata

Add a new metadata object and upload it to the project. The file must be located in a currently opened dump, and in the correct directory.

If the --objectName parameter is not specified, addMetadata will add all new objects in the current dump.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| objectName | string | | name of the object (with or without .json extension) | |

Note (updating existing metadata objects): when modifying metadata objects that have already been added (uploaded), use the pushProject command to upload the modified objects to the project.

createMetadata

Create a new metadata object.

At this moment, only dataset type is supported. Datasets are generated from a provided CSV file.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| type | enum | | type of the object | [dataset] |
| objectName | string | | current name of the object (with or without .json extension) | |
| subtype | enum | | subtype of the object; required only for dataset type | [basic, geometryPoint, geometryPolygon] |
| file | string | | path to the CSV file (located either in dump, or anywhere in the file system); required only for dataset type | |
| primaryKey | string | | name of the CSV column that will be marked as primary key; if not specified, the first CSV column is selected | |
| geometry | string | | name of the geometry key; required only for geometryPolygon subtype | |
| csvSeparator | char | | specifies custom CSV column separator character; default = , | |
| csvQuote | char | | specifies custom CSV column quote character; default = " | |
| csvEscape | char | | specifies custom CSV column escape character; default = \ | |

Usage examples:

    createMetadata --type dataset --subtype basic --objectName "baskets" --file "baskets.csv" --primaryKey "basket_id"

    createMetadata --type dataset --subtype geometryPoint --objectName "shops" --file "shops.csv" --primaryKey "shop_id"

    createMetadata --type dataset --subtype geometryPolygon --objectName "district" --geometry "districtgeojson" --file "district.csv" --primaryKey "district_code"

removeMetadata

Remove a metadata object from the project and from the dump. The file must be located in a currently opened dump, and must not be new.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| objectName | string | | name of the object (with or without .json extension); one of objectName, objectId or orphanObjects parameters must be specified | |
| objectId | string | | id of the object | |
| orphanObjects | boolean | | prints a list of removeMetadata commands to delete orphan metadata objects; an orphan object is an object not referenced from any of the project's views, or visible anywhere else in the app | |

Rename an object in a local dump and on the server. If the object is referenced in some other objects by URI (/rest/projects/$projectId/md/{objectType}?name=), the references will be renamed as well.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| objectName | string | | current name of the object (with or without .json extension) | |
| newName | string | | new name of the object (with or without .json extension) | |

Create a copy of an object existing in a currently opened dump.

This command unwraps the object from a wrapper, renames it and removes generated common syntax keys. If the objectName and newName arguments are the same, the object is unwrapped only.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| objectName | string | | current name of the object (with or without .json extension) | |
| newName | string | | name of the object copy (with or without .json extension) | |

pushProject

Upload all modified files (data & metadata) to the project.

This command basically wraps the functionality of loadCsv. It collects all modified metadata to upload, and performs full load of CSV files.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| skipMetadata | boolean | | skip metadata push | |
| skipData | boolean | | skip data push | |
| skipValidate | boolean | | skip the run of validate after push | |
| force | boolean | | force metadata push when there's a share breaking change | |
| verbose | boolean | | enables more verbose output; default = false | |
| multipart | boolean | | enables multipart file upload (recommended for files larger than 2 GB); default = true | |
| gzip | boolean | | enables gzip compression; default = true | |

status

Check the status of a currently opened dump against the project on the server.

This command detects files which have been locally or remotely changed, and also detects files which have a syntax error or constraint violations.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| remote | - | | list metadata contents on the server | |

applyDiff

Create and apply metadata diff between two live projects.

This command compares all metadata objects of the currently opened project with those of the project specified in the --sourceProject parameter, and applies the changes to the currently opened dump. Metadata objects in the dump can be either:

- added (completely new objects that aren't present in currently opened project)

- modified

- deleted (deleted objects not present in the sourceProject)
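The three categories above can be sketched as a comparison of two sets of metadata objects. This is an illustration only: the function name `classify_objects` is hypothetical, and representing each project as a dict mapping object names to their content is an assumption, not how the Shell stores metadata.

```python
def classify_objects(current, source):
    """Classify metadata objects when applying source onto current.

    current and source map object names to object content for the
    currently opened project and the source project respectively.
    """
    # Present in the source project, missing in the currently opened project.
    added = sorted(source.keys() - current.keys())
    # Present in the currently opened project, missing in the source project.
    deleted = sorted(current.keys() - source.keys())
    # Present in both projects, but with different content.
    modified = sorted(
        name for name in source.keys() & current.keys()
        if source[name] != current[name]
    )
    return {"added": added, "modified": modified, "deleted": deleted}
```

Objects that are identical in both projects fall into none of the three categories and are left untouched.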

When the command finishes, you can review the changes applied to the dump using either the status or diff commands. The command then tells you to perform specific subsequent steps. This can be one of (or all of) these commands:

- addMetadata (to add the new objects)

- pushProject (to push the changes in modified objects)

- removeMetadata (to remove the deleted objects; a list of commands is generated, which must be copied and pasted into Shell)

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| sourceProject | string | | id of the source project | |
| objectTypes | string | | list of object types to be compared; example = "views,indicators,metrics" | |

diff

Compare local metadata objects with those in project line by line.

If the --objectName parameter is not specified, all wrapped modified objects are compared.

| Parameter name | Type | Optionality | Description | Constraints |
|---|---|---|---|---|
| objectName | string | | name of a single object to compare (with or without .json extension) | |

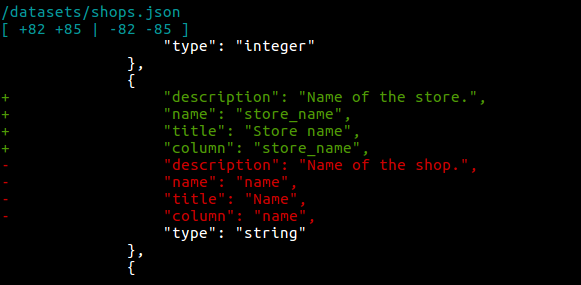

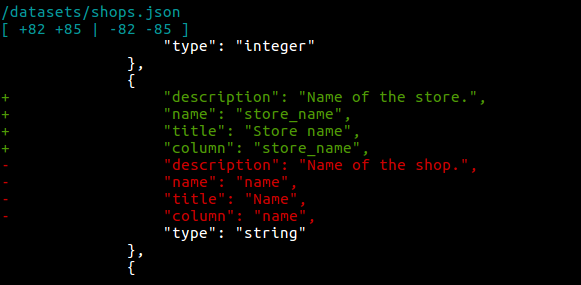

The command outputs sets of changes ("deltas") made in each object. Each object can have multiple deltas, which are followed by a header with the following syntax:

    /{objectType}/{objectName}.json
    [ A1 A2 | B1 B2 ]
    ...

Where:

A1 = start delta line number in the dump file

A2 = end delta line number in the dump file

B1 = start delta line number in the remote file

B2 = end delta line number in the remote file

Specific output example:

Which means lines 82-85 in the dump object have been added (+) in favor of lines 82-85, which have been removed (−) from the remote object.
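If you post-process the diff output, the delta header can be parsed with a short script. The sketch below assumes the header appears literally as four integers in the `[ A1 A2 | B1 B2 ]` layout described above; the function name `parse_delta_header` is hypothetical and the actual output may contain additional formatting.

```python
import re

# Four integers in the "[ A1 A2 | B1 B2 ]" layout (assumed literal format).
HEADER_RE = re.compile(r"^\[\s*(\d+)\s+(\d+)\s*\|\s*(\d+)\s+(\d+)\s*\]$")


def parse_delta_header(line):
    """Return the dump and remote line ranges of a delta header."""
    match = HEADER_RE.match(line.strip())
    if match is None:
        raise ValueError(f"not a delta header: {line!r}")
    a1, a2, b1, b2 = (int(group) for group in match.groups())
    return {"dump": (a1, a2), "remote": (b1, b2)}
```

For the case described above, a header of `[ 82 85 | 82 85 ]` gives the range 82 to 85 for both the dump file and the remote file.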
